
A new evaluation of neuropsychological tests


Below you will find the basics of a new evaluation system for neuropsychological tests. I developed it because the older evaluation system does little to help or encourage the improvement of neuropsychological tests. Furthermore, comparing tests is much more complicated with the old system.

I hope you will like my suggested modern evaluation system. Enjoy the reading! But first, a short introduction to test psychology.

Short introduction to test psychology

Test psychology, the study of developing, maintaining and evaluating (neuropsychological) tests, is hardly popular among psychology students. I hope to show you that it should be. One of the key reasons for my plea is that neuropsychological tests are the most important tools neuropsychologists use to say something about brain dysfunction. So a lot depends on neuropsychological tests.

Neuropsychological tests are specific, standardized procedures in which someone is asked to do something, after which it is recorded how it was done and what the results are, in particular to measure cognitive or brain functions. For example, asking someone to add 7 and 5 is a very simple test. It has a standard way of asking but can have different items (adding 6 and 1, or 2 and 3). The outcome can simply be scored as right or wrong.

A more complex procedure uses more outcome measures, such as the time taken to complete it, and has a wider range of possible results. Test psychology requires enough possible outcomes so that results can vary: only when results vary can differences between people be detected. A simple memory procedure in which you have to remember just 2 words does not have enough variation. Most people will remember both words, so even when their memories differ, this simple measure will not be able to detect it.
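To make "enough variation" concrete, here is a minimal Python sketch with made-up scores (not real data). It compares a 2-word and a 15-word recall test on two things: the share of people who reach the maximum score, and the variance of the scores. The helper `ceiling_share` is purely illustrative.

```python
from statistics import pvariance

def ceiling_share(scores, max_score):
    """Fraction of people who obtain the maximum possible score."""
    return sum(1 for s in scores if s == max_score) / len(scores)

# Made-up recall scores for the same ten people on two hypothetical tests.
two_word_test = [2, 2, 2, 2, 1, 2, 2, 2, 2, 2]            # maximum score 2
fifteen_word_test = [9, 12, 7, 14, 6, 11, 10, 13, 8, 12]  # maximum score 15

for name, scores, max_score in [("2 words", two_word_test, 2),
                                ("15 words", fifteen_word_test, 15)]:
    print(name, "at ceiling:", ceiling_share(scores, max_score),
          "variance:", round(pvariance(scores), 2))
```

When almost everybody is at the ceiling, the scores hardly vary, so the test cannot tell people apart.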

Once a procedure has been developed, the essential step in test psychology is to see what results are found when you administer the new test to normal people (meaning: without a brain injury, supposedly having a normal brain) and to brain injury patients. Of course, you cannot examine everyone, so you try to find a representative sample.

Again, test psychology teaches you that a sample has to be similar to the larger population in many relevant aspects (e.g., age, education, sex). For example, when an attention test is meant for adult patients, I cannot examine only children under 12 years of age. They will of course be quite different from adults, and the results will not be comparable.

Test psychology does not say much about what form tests should take. But I certainly prefer computerized tests because they are easy to administer and most people like them. Also, a computer does not make mistakes in recording the results and delivers those results in a split second.

Unfortunately, most psychologists are not that computer-savvy, so they still work with so-called paper-and-pencil formats. Such instruments take more time to administer, allow more mistakes in recording the results, and allow more variability in the way the test is administered (it is more difficult to administer them in exactly the same standard way).

Reliability is essential

Test psychology has set up a lot of (statistical) rules that neuropsychological tests have to meet. One fundamental rule is that a procedure must be reliable, which means that when it measures something at one time, it has to measure it in the same way at another time (so-called test-retest reliability). Test-retest reliability is often expressed as a correlation: the correlation between the first and the second administration of the test. Ideally, the correlation coefficient r should be .70 or higher.
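As an illustration, the sketch below computes Pearson's correlation between two sessions and checks it against the .70 guideline. The scores are invented, and `pearson_r` is a hand-written helper (Python 3.10 and later also offer `statistics.correlation`, which computes the same coefficient).

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

# Invented scores of the same eight people at two sessions a few weeks apart.
session_1 = [12, 15, 9, 20, 14, 11, 17, 13]
session_2 = [13, 14, 10, 19, 15, 10, 18, 14]

r = pearson_r(session_1, session_2)
print(f"test-retest r = {r:.2f}", "meets .70" if r >= 0.70 else "below .70")
```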

Compare it with a blood pressure cuff that goes around your upper arm. You surely want it to measure your blood pressure reliably, so that you are certain that different results reflect a change in your blood pressure rather than a measurement error.

Normally, reliability is determined by administering the instrument twice to the same group of patients or healthy people (the latter are called 'controls' because their results are taken as the norm). Ideally, there are only a few weeks between the two sessions, because it is essential that the patients or healthy people do not change in this period. Otherwise, results may change, and it is then unclear whether this is due to the patients having changed or to the test's instability.

Unfortunately, it is very difficult for developers to find people who are willing to undergo a procedure twice within a couple of weeks. Therefore, in many studies reliability has been examined under less than ideal circumstances, and sometimes not much can be said about it.

Neuropsychological tests differ in their reliability. Some tests show a strong retest (practice) effect: performance on the second administration differs markedly from the first. This happens, for example, when you do a test and quickly discover its 'trick', so the next time your performance is far better than the first time.

Of course, some simple memory measures have this 'problem'. When you have to remember 3 words and, about 2 weeks later, the same 3 words again, you will probably still remember them from the first session and your performance will be 100% (the so-called 'ceiling effect').

Another example of retest problems can be found in so-called executive function tests (problem-solving tests). Such procedures require strategic thinking in which you have to discover the 'trick'. When you become aware of this trick during the first administration, you will most likely do much better the next time. An example of such an executive function test is the Wisconsin Card Sorting Test. Its principle is quite easy to grasp once discovered, and it does not help that this knowledge has spread all over the Internet.
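One simple way to make such a practice effect visible is to look at the average change between the two administrations, not only at their correlation: if everybody improves by roughly the same amount, the correlation between sessions can stay high while the scores themselves have clearly shifted. A minimal sketch with invented scores, assuming higher scores mean better performance:

```python
def mean_practice_gain(first, second):
    """Average change from the first to the second session (positive = better)."""
    return sum(b - a for a, b in zip(first, second)) / len(first)

# Invented scores (e.g. number of correct sorts) for five people, tested twice.
first = [30, 42, 25, 38, 33]
second = [45, 55, 41, 50, 47]  # everyone improves once the 'trick' is known

print("mean gain:", mean_practice_gain(first, second))
```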

The solution to such unwanted retest effects, offered by developers and test psychology, is to develop so-called parallel forms: versions of an instrument that resemble each other and can be used interchangeably. But the problem with parallel forms is that each of them has to be administered at least once to the same patients and healthy people. So they have to be willing to undergo a procedure at least twice. Such research or norming is rare and usually done only with very small groups of volunteers.

Again, the practical problem is that not many people have enough spare time to invest in this kind of research. Finding people to take tests is a very large problem for developers. So participants often get paid, or only students are used. You can understand that with such workarounds it is far from certain that the sample fairly represents all people.

To summarize: in ideal circumstances, the test-retest reliability of a neuropsychological test should be high, at least .70. And this should be true not because the test is very simple to do, but because the test measures a cognitive function so reliably that even a small change in this function can be detected.

Validity: not essential but necessary

According to test psychology theory, validity means that a procedure indeed measures what it is supposed to measure. When I want to use a memory procedure, I have to be sure that it really measures what we call 'memory'. When someone is asked to remember 3 words, this is rather obvious. But when someone is asked to remember 15 words, repeating them 5 times, it is less clear what the procedure measures. That is because more cognitive processes (information processing) are needed for this task, such as attention, problem solving and perception.

Validity is one of the most challenging and difficult aspects for a developer, and a lot has been written about it in the test psychology literature. However, to know whether a neuropsychological test is valid, we need solid knowledge of the brain functions it is supposed to measure.

But our scientific knowledge about brain functions is still severely limited. In the neurosciences we do not have rock-solid models that explain exactly what every brain region or function does. Our tools are still rather primitive for that, and the brain is far too complex to understand fully. So we work with a relatively large variety of models of memory, attention and problem solving.

Researchers try to find evidence for each model in their studies, and these studies are published in a very broad range of journals. It would be easier to have only 2 or 5 journals, but instead we have a few hundred brain journals... So we have to find some consensus about the different brain models, and that is challenging, to say the least. Fortunately, most scientists do seem to agree on some specific models of memory, attention and problem solving. So there is some consensus about different brain functions.

But when it comes to interpreting tests and what they are supposed to measure, consensus is not that easily found. Every researcher uses his own kind of procedure to demonstrate that a specific model is true. The result is that some 'standard' neuropsychological tests exist in several different versions, sometimes more than 20! And when a procedure looks the same but has different instructions or is set up just a little differently from another version, you are bound to get different results.

That is not reliable science, and it is certainly not what test psychology recommends. However, due to the high publication pressure on scientists, they do not look closely at these 'minor' details. They simply assume in their studies that most procedures are the same, so that they can go on to say something about a model of memory (or attention). Furthermore, neuroscientists are not test psychologists.

As a neuropsychological test developer I strongly disagree with this attitude to science, because to me finding the real truth, or at least approaching it, is more important than getting an article published.

The current situation is even more worrying because clinicians depend on scientific journals, so their choices are influenced by science. And I have seen more than enough examples of bad (but accepted) science that has led to the use of tests that are really useless in neuropsychological research and even bad practice in clinical settings.

The sad story is that it is getting even worse: because science largely works by consensus, many bad tests are still being used and will continue to be used. Many PhD dissertations are published in which conclusions are drawn using inadequate (but popular) neuropsychological tests. Such conclusions are then adopted by younger, less experienced clinicians, and eventually they acquire the status of 'absolute' truth.

I hope to expose this erroneous way of doing science, and of using it in clinical practice, on my pages about specific neuropsychological (e.g., attention) tests. And really, I can't blame the scientists, because they are not test psychology experts and they are stuck in a repressive system of publication pressure.

Standardization

Another rule in test psychology for a good neuropsychological test is that it should be administered in a standard way. When instructions differ in an otherwise identical test procedure, results can differ because of it. Suppose you have to remember 10 words and I give you one of two instructions: A. 'please remember especially the first 5 words', or B. 'please remember as many as you can'. It is possible that fewer words will be remembered in one of the procedures (most likely A, where attention is forced onto the first 5 words, so less attention is paid to the other words).

Most tests do indeed have standard instructions that have to be spoken exactly as written. Psychologists are trained to do this, especially when they have taken courses in test psychology. In neuropsychological examinations such standard instructions are extremely important. Every single, seemingly minor change in administration can change the way someone thinks or how his attention is directed. That can immediately lead to a different outcome.

When the outcome measure is the number of words remembered from a list of only 5, it is easy to understand that a small change in instructions can lead to a relatively large change in outcome: remembering 4 instead of 5 words is already a 20% drop.

In many memory tests the words are spoken aloud by the examiner at a certain pace (usually 1 second per word is recommended). The problem with speaking aloud, however, is that every voice is unique, which leads to different articulations of the words to be remembered. Even the pace differs per examiner, and we know that changing the pace influences the recall of words in a list. So ideally, word lists should be recorded on tape and played back on the same recorder at exactly the same speed.

This kind of perfect standardization, explicitly recommended in test psychology, is still not common practice, largely because many psychologists do not know much about computers! But it is also due to the instructions of many tests, which require words or numbers to be spoken aloud by the examiner.
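A computer makes a fixed pace trivial to enforce. The sketch below only computes the onset time of each word for a list presented at 1 second per word; actually playing a recorded sound file at each onset is left out, because that depends on the audio library used. The word list and the function name are hypothetical.

```python
def presentation_schedule(words, seconds_per_word=1.0):
    """Return (onset_in_seconds, word) pairs for a fixed-pace word list."""
    return [(i * seconds_per_word, word) for i, word in enumerate(words)]

# Hypothetical word list; a real test would use its own published list
# and, ideally, one recorded audio file per word.
for onset, word in presentation_schedule(["apple", "river", "chair", "garden", "coin"]):
    print(f"{onset:4.1f} s  {word}")
```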

Good test norms are essential

When a test is finally readily available in a standard form and has sufficient reliability and validity, it is essential to know what the results mean. The most important question about a neuropsychological result is: is it normal or abnormal? Does a score belong to a normal population (in test psychology language) or to a group of brain-damaged patients? And the second, related question is: how abnormal is this score?
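A common way to answer both questions is to express a score as a z-score relative to the norm group's mean and standard deviation, and to convert that into a percentile. The sketch below assumes the norm scores are roughly normally distributed (often not true for neuropsychological tests), and the cutoffs of -1.5 and -2 standard deviations are only an illustrative convention, not a rule from this page. The norm values are invented.

```python
from statistics import NormalDist

def compare_to_norms(score, norm_mean, norm_sd, higher_is_better=True):
    """Return (z, percentile) of a raw score relative to a normative group."""
    z = (score - norm_mean) / norm_sd
    if not higher_is_better:
        z = -z  # e.g. completion times: negative z should always mean 'worse'
    percentile = NormalDist().cdf(z) * 100  # assumes normally distributed norms
    return z, percentile

def label(z):
    # Illustrative cutoffs only; real tests and settings use their own criteria.
    if z <= -2.0:
        return "abnormal"
    if z <= -1.5:
        return "borderline"
    return "within normal range"

# Invented norms for one age/education group: mean 45, SD 7.
z, pct = compare_to_norms(score=31, norm_mean=45, norm_sd=7)
print(f"z = {z:.2f}, percentile = {pct:.1f}, {label(z)}")
```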

I can state rather bluntly that the large majority of the most widely used and best-known neuropsychological tests are inadequately normed. That is, the norms are usually biased, not very representative of a normal population, and too old. This is largely because norming instruments is a very time-consuming and therefore expensive business. And it is not the work of scientists but of developers (publishers).

There are so many different neuropsychological tests out there (with so many inadequate norms) that it is nearly impossible to decide wisely which of them to norm. The risk of wasting a lot of money on norming a specific test that then turns out not to be used much is relatively high. This economic aspect of developing neuropsychological tests is not much discussed in test psychology handbooks.

This is the sorry state of affairs we now live in, all over the world. Competition, publication culture and egocentrism have not led to a perfect science in which we all use a couple of very good neuropsychological tests that, because they are used worldwide, are extremely well normed.

The human enterprise, the how of making and distributing tests, is not something that is taught in test psychology courses. Maybe because that is real life... However, my dream is still that a few tests can be used widely and then normed extremely well (globally).

Of course, there are already tests that are used globally (especially the Wechsler and Delis-Kaplan sets). But we still do not have a large reviewers' forum in which the shortcomings of such tests are discussed and suggestions for improvement are made. This, too, is my mission with these pages on test psychology, because I firmly believe such a forum can be set up, freely. With your help.

Sometimes norming an instrument is not really necessary. This is the case when you can be quite certain that normal individuals perform at an almost 100% rate on a specific procedure, for example in visual field procedures in which flashes are displayed at high speed on a computer screen.

From biology we know that normal humans tend to see everything in their visual field, especially when those 'things' are light flashes. Even children see them. So in such a procedure, missing more than, say, 2 flashes (or stimuli, as they are technically called) is abnormal. There is no need to test this with thousands of volunteers. I have not come across this kind of reasoning in test psychology books, however.
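This kind of criterion-based decision needs no norm table at all, only a cutoff chosen by reasoning. A minimal sketch, using the "more than, say, 2 missed flashes" example as an adjustable cutoff; the function name is hypothetical:

```python
def visual_field_result(missed_flashes, max_allowed_misses=2):
    """Classify a visual field screening with a reasoned cutoff instead of norms.

    Healthy people are expected to see (almost) all flashes, so missing more
    than `max_allowed_misses` is flagged as abnormal.
    """
    return "abnormal" if missed_flashes > max_allowed_misses else "normal"

for misses in (0, 2, 5):
    print(misses, "missed:", visual_field_result(misses))
```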

The same goes for simple neurological procedures such as lifting your right arm or pointing with your right finger to your nose with your eyes closed. For such procedures we already know that all normal human beings (without brain damage) can do them perfectly.

Ideally, a neuropsychological test should be that simple and at the same time be able to differentiate between a healthy person and a brain-damaged one. This kind of test psychology, actually test philosophy, should be taught more, so that every psychologist learns to appreciate the phases of test construction and is inspired to develop even better tests.

Test availability

A good neuropsychological test should be readily available and customers (usually clinicians) should see quite effortlessly where it can be bought. Unfortunately, this is still not the case.

There is no central database where you can quickly find where to obtain an instrument. I use two large handbooks to find this out. However, sometimes the publisher has gone bankrupt or the book contains outdated information. Then Google could save the day. Well, Google helps... a bit. Sometimes it takes some creativity and time to find a test.

Another availability problem is that some procedures are not commercially available at all. Sometimes a test procedure has been developed specifically for a study, and the researchers have not considered that it could become popular. Researchers are, of course, not test developers or test publishers. So obtaining such a newly developed test is a problem, because it has not been developed in a form that can be distributed widely.

Clinical usability or 'friendliness'

Another criterion for a neuropsychological test does not exist, scientifically speaking: clinical usability. It is my personal one. Having tested more than 3000 patients and some 300 normal persons myself, I discovered that a procedure has to be 'fun' as well as reliable and valid. When someone does not like a test, chances are that his performance is far from optimal. Not because he cannot do it, but because he does not want to do it.

As a neuropsychologist I have methods to detect such reduced motivation, but when a test is not very friendly, the damage has already been done. All I can say then is that the test performance was not optimal due to motivational problems.

It would be much better, though, to have a test in which motivation is not a problem at all. A test developer should think about this carefully. There are tests with a very high drop-out rate: people get extremely stressed, and as a result they do not want to continue the test (or worse: other tests as well). This harms your neuropsychological investigation to such an extent that no valid conclusions can be drawn about cognitive (brain) functions.

Friendliness or clinical usability is, of course, somewhat difficult to measure. But in my opinion it is possible to develop fun tests in which the examined person does not feel overwhelmed, disappointed or angry, simply because the test procedure does not let that happen. For example, many tests have instructions to stop the test when 3 or more errors in a row are made. That is explicitly done to prevent stress and demotivation.
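A discontinue rule like this is easy to automate on a computer. The sketch below assumes items are scored simply as correct or incorrect and stops right after the third consecutive error; the function name and the example responses are made up.

```python
def discontinue_index(responses, max_consecutive_errors=3):
    """Return how many items are administered before the test is stopped.

    `responses` is a sequence of booleans (True = correct). Administration
    stops right after `max_consecutive_errors` errors in a row.
    """
    run = 0
    for i, correct in enumerate(responses, start=1):
        run = 0 if correct else run + 1
        if run >= max_consecutive_errors:
            return i
    return len(responses)

# Made-up responses: the third error in a row falls on item 8.
print(discontinue_index([True, True, False, False, True, False, False, False, True]))
```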

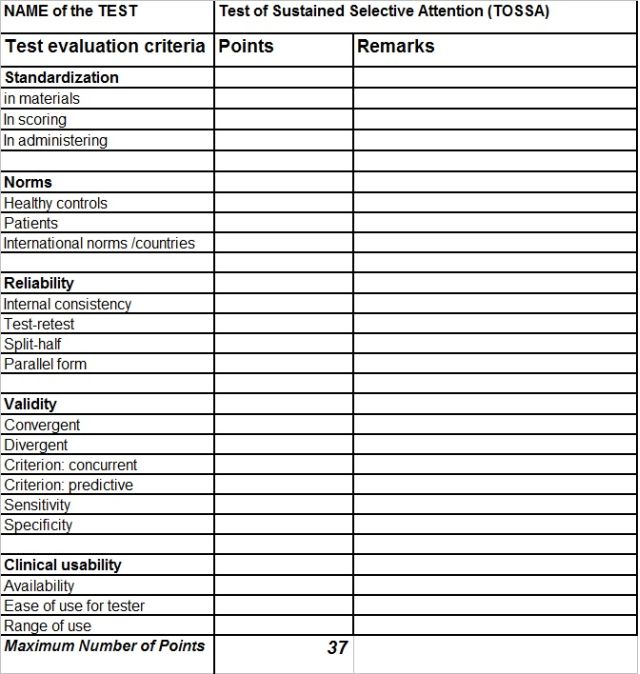

Table for the evaluation of modern neuropsychological tests

To evaluate a neuropsychological test, several criteria can be set up. I have made a table in which I use several criteria to evaluate neuropsychological tests. Most of these criteria are mainstream and are also used in handbooks such as Strauss, Sherman and Spreen (2006).

However, I use these criteria here to give every test a certain number of points. The higher a test scores, the better the test. I like doing this because it lets me compare tests with each other.
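Once each criterion has been rated, the point total can be computed mechanically. The sketch below takes the criteria and their maximum points from the descriptions further down this page (international norms 0-1, all others 0-2). Counting concurrent and predictive validity as two separate criteria, and simply adding all points without weights, are assumptions; the table may group or weigh them differently.

```python
# Maximum points per criterion, following the criteria described on this page.
# Counting concurrent and predictive validity separately is an assumption.
MAX_POINTS = {
    "materials": 2, "scoring": 2, "administration": 2,
    "healthy_controls": 2, "patient_groups": 2, "international_norms": 1,
    "internal_consistency": 2, "test_retest": 2, "split_half": 2,
    "parallel_forms": 2, "convergent_validity": 2, "divergent_validity": 2,
    "concurrent_validity": 2, "predictive_validity": 2,
    "sensitivity_specificity": 2,
    "availability": 2, "ease_of_use": 2, "range_of_use": 2,
}

def total_points(ratings):
    """Return (points obtained, maximum possible) for one test.

    Criteria missing from `ratings` count as 0, like 'no reported data'.
    """
    for criterion, points in ratings.items():
        if criterion not in MAX_POINTS:
            raise KeyError(f"unknown criterion: {criterion}")
        if not 0 <= points <= MAX_POINTS[criterion]:
            raise ValueError(f"{criterion}: {points} outside 0-{MAX_POINTS[criterion]}")
    return sum(ratings.values()), sum(MAX_POINTS.values())

# Hypothetical ratings for an imaginary test.
print(total_points({"materials": 2, "scoring": 2, "administration": 1, "test_retest": 1}))
```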

A classification system based on points will hopefully appeal more to users and stimulate test developers to improve or reject a test much more quickly. Well, at least, I surely hope so.

This evaluation system is by no means final. I would like to start with it to stimulate a more critical view of tests and to trigger interactive discussions with users, researchers and developers, all in order to improve our neuropsychological arsenal of tests. I will add a comment-based system to my test pages to stimulate such discussions, and perhaps it will result in a handy and even better (international) test evaluation system. That would be nice. Although I am not naive: most scientists and/or developers are still very conservative...

Above: the new Table of evaluation criteria for neuropsychological tests

New test criteria explained

Standardization

A good test has to be identical in every place and at every time, not only in its materials but also in the way it is scored and administered.

In materials:

0= Insufficient. Materials are very susceptible to degrading due to heavy use or copying. Examples are tape recordings that are played on many different tape recorders with different sound and speeds. Tapes also wear out and lose sound quality easily with heavy use. Materials are not commercially available, so all sorts of copies exist in many places. Stimuli can therefore easily sound or look different from each other, and different from the original stimuli used in norming studies.

1= Reasonable. Materials are commercially available in one format but still very susceptible to wear and tear (such as tape recordings). Stimuli can still sound or look different from each other, and different from the original stimuli used in norming studies. Or: materials are only partly standardized in a uniform format.

2= Good. All materials are commercially available in one format and hardly or not susceptible to wear and tear. Examples are plastic materials, computer software, and sound or visual recordings on a computer.

In scoring:

0= Insufficient. The scoring system does not have sufficiently clear-cut instructions, so multiple interpretations are possible, increasing the risk of scoring differences between testers. All scoring has to be done by hand, so errors are possible. Or scoring by hand takes more than 20 minutes, increasing the risk of fatigue and errors.

1= Reasonable. The scoring system has clear-cut instructions and multiple interpretations are almost impossible. However, scoring has to be done by hand and takes up to 20 minutes, still increasing the risk of fatigue and errors.

2= Good. The scoring system is completely automated, so no errors in scoring are possible, and it is done in less than 1 minute.

In administering:

0= Insufficient. Administering the test is so complicated that it requires considerable training to do it perfectly, which increases the risk of errors. Or the administration instructions are insufficiently clear, so the risk of different administrations is high.

1= Reasonable. The administration rules are partly (mostly) written out in the manual. The risk of different administration formats is still present but, when the manual is used, fairly low.

2= Good. The instructions for administering the test are short and clearly written in the manual or displayed on the computer screen. The risk of having different formats in administering the test is very low.

Norms

A good test has to have sufficient solid norming data, and sufficient information should be presented to evaluate the norming studies.

Healthy controls

0= Not available, or norms can be considered too old and almost certainly no longer valid. Or no reported data.

1= Available but fewer than 100 controls, age groups barely stratified, regional representation insufficient or biased, cell sizes across age groups differ considerably, or not all ages from 8 years up are represented.

2= Available and groups larger than 100 controls, fairly stratified, regional representation fairly distributed, cell sizes across age groups fairly equally distributed, and all ages from 8 up represented.

Patient groups

0= Not available, or norms can be considered too old and almost certainly no longer valid. Or no reported data.

1= Available but fewer than 100 patients, age groups barely stratified, no equal distribution of sexes or educational level, regional representation insufficient or biased, cell sizes across age groups differ considerably, or not all ages from 8 years up are represented. Only 1 or 2 different patient groups are represented.

2= Available and groups larger than 100 patients, fairly stratified, with educational level and sexes equally distributed, regional representation fairly distributed, cell sizes across age groups fairly equally distributed, and all ages from 8 up represented. More than 2 different patient groups are available.

International norms / countries involved

0= norms only available for country of origin. Or no reported data.

1= norms also available in different languages and/or countries

Reliability

Internal consistency

0= Insufficient. Reliability coefficient lower than or equal to .70. Or no reported data.

1= Reasonable. Reliability coefficients between .70 and .85.

2= Good. Reliability coefficient higher than or equal to .85.
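Internal consistency is most often reported as Cronbach's alpha, which compares the variance of the individual items with the variance of the total score. This page does not say which coefficient it has in mind, so alpha is an assumption here. A minimal sketch with invented item scores (rows are people, columns are items):

```python
from statistics import pvariance

def cronbach_alpha(item_scores):
    """Cronbach's alpha; `item_scores` is a list of people, each a list of k item scores."""
    k = len(item_scores[0])
    items = list(zip(*item_scores))                   # regroup the scores per item
    totals = [sum(person) for person in item_scores]  # total score per person
    sum_item_var = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - sum_item_var / pvariance(totals))

# Invented scores of five people on four items (0-2 points each).
people = [[2, 2, 1, 2], [1, 1, 1, 0], [0, 1, 0, 0], [2, 1, 2, 2], [1, 1, 1, 1]]
print(f"alpha = {cronbach_alpha(people):.2f}")
```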

Test-retest reliability

0= Insufficient. Reliability coefficient lower than .60. Or no reported data.

1= Reasonable. Reliability coefficient of .60 or higher, but below .85.

2= Good. Reliability coefficient higher than or equal to .85. Needed for decision making about an individual patient.

Split-half reliability

0= Insufficient. Reliability coefficient lower than .60. Or no reported data.

1= Reasonable. Reliability coefficient of .60 or higher, but below .85.

2= Good. Reliability coefficient higher than or equal to .85. Needed for decision making about an individual patient.
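Split-half reliability correlates two halves of the same test and then corrects for the fact that each half is only half as long, usually with the Spearman-Brown formula. The odd-even split used below is only one of several possible ways to divide the items, and the scores are invented.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equally long lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def spearman_brown(r_half):
    """Estimate full-length reliability from the correlation between two halves."""
    return 2 * r_half / (1 + r_half)

def split_half_reliability(item_scores):
    """Odd-even split-half reliability; rows are people, columns are items."""
    odd = [sum(person[0::2]) for person in item_scores]   # items 1, 3, 5, ...
    even = [sum(person[1::2]) for person in item_scores]  # items 2, 4, 6, ...
    return spearman_brown(pearson_r(odd, even))

# Invented right/wrong (1/0) scores of five people on six items.
people = [[1, 1, 0, 1, 1, 0], [1, 1, 1, 1, 1, 1], [0, 0, 0, 1, 0, 0],
          [1, 0, 1, 1, 1, 1], [0, 1, 0, 0, 1, 0]]
print(f"split-half reliability = {split_half_reliability(people):.2f}")
```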

Parallel test reliability

See classification scheme for test-retest reliability
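The reliability thresholds above translate directly into points. The two functions below are a minimal sketch; because the ranges as written meet at exactly .85, a coefficient of .85 is given 2 points here (an assumption), and `None` stands for "no reported data".

```python
def internal_consistency_points(coefficient):
    """0 = .70 or lower (or not reported), 1 = above .70 and below .85, 2 = .85 or higher."""
    if coefficient is None:
        return 0
    if coefficient >= 0.85:
        return 2
    if coefficient > 0.70:
        return 1
    return 0

def retest_points(coefficient):
    """Test-retest, split-half, parallel forms: 0 = below .60, 1 = .60 to below .85, 2 = .85+."""
    if coefficient is None:
        return 0
    if coefficient >= 0.85:
        return 2
    if coefficient >= 0.60:
        return 1
    return 0

print(internal_consistency_points(0.78), retest_points(0.91), retest_points(None))
```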

Validity

Convergent validity

0= Insufficient. Correlation coefficients between the test and other similar tests lower than .30. No factor analysis or clustering of underlying factors has been undertaken. Or no reported data.

1= Reasonable. Correlation coefficients of .30 or higher, but below .40. Factor analysis has been done, but with a sample smaller than 100.

2= Good. Correlation coefficients higher than or equal to .40. Factor analysis or other analyses of underlying factors have been done in groups larger than 100 patients.

Divergent validity

0= Insufficient. Correlations between the test and other non-similar tests average .40 or higher. Or no reported data.

1= Reasonable. Correlation coefficients of .30 or higher, but below .40.

2= Good. Correlation coefficients between the test and non-similar tests are lower than .30. The correlations clearly show a trend: they decrease as tests move further away from the supposedly assessed domain.
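The correlation part of these two validity criteria can be scored in the same way. This sketch deliberately leaves out the factor-analysis requirements, which need a judgment about the study design, and assumes the correlations are coded so that a positive value means agreement between tests.

```python
def convergent_points(mean_r):
    """Correlation with similar tests: 0 = below .30, 1 = .30 to below .40, 2 = .40+."""
    if mean_r is None:  # no reported data
        return 0
    if mean_r >= 0.40:
        return 2
    if mean_r >= 0.30:
        return 1
    return 0

def divergent_points(mean_r):
    """Average correlation with non-similar tests: lower is better."""
    if mean_r is None or mean_r >= 0.40:
        return 0
    if mean_r >= 0.30:
        return 1
    return 2

print(convergent_points(0.52), divergent_points(0.18))
```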

Concurrent Validity and Predictive Validity

See the classification scheme for convergent validity

Sensitivity and Specificity

Depending on what the test is supposed to do, either detecting a specific disorder with certainty or ruling a specific disorder out, the required values of sensitivity or specificity can differ.

0= lower than 50% sensitivity and specificity; groups are smaller than 100;

1= between 50 and 70% sensitivity and specificity; groups are smaller than 100;

2= higher than 70% sensitivity and specificity, and groups are larger than or equal to 100.
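Sensitivity is the share of patients the test correctly flags as abnormal; specificity is the share of healthy controls it correctly classifies as normal. The sketch below computes both from a 2x2 table and applies the point rules above. Requiring both values (the lower of the two) to pass, and giving 1 point when the percentages are high but a group is smaller than 100, is one possible reading of the criteria, not a fixed rule; for a test meant only to rule a disorder out, you might score sensitivity alone.

```python
def sensitivity_specificity(true_pos, false_neg, true_neg, false_pos):
    """Return (sensitivity, specificity) from a 2x2 classification table."""
    sensitivity = true_pos / (true_pos + false_neg)  # patients correctly flagged
    specificity = true_neg / (true_neg + false_pos)  # controls correctly cleared
    return sensitivity, specificity

def sens_spec_points(sensitivity, specificity, n_patients, n_controls):
    """0-2 points; uses the lower of the two values and the smaller group."""
    worst = min(sensitivity, specificity)
    if worst > 0.70 and min(n_patients, n_controls) >= 100:
        return 2
    if worst >= 0.50:
        return 1
    return 0

# Invented study: 85 of 100 patients flagged, 180 of 200 controls cleared.
sens, spec = sensitivity_specificity(true_pos=85, false_neg=15, true_neg=180, false_pos=20)
print(sens, spec, sens_spec_points(sens, spec, n_patients=100, n_controls=200))
```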

Clinical usability or friendliness

Availability

0= Not commercially available, or availability very dependent on the goodwill of one person or organisation. No website available.

1= Available free of charge from some passionate researchers or developers. Only obtainable by regular mail. No website available to order the test.

2= Very easy to obtain via a website with an order form. Usually commercially available.

Ease of use for tester

0= Very complicated to administer; a relatively large amount of time is needed to master the test administration. Furthermore, test administration requires full concentration to do all the different things perfectly.

1= Not so complicated to administer, but some experience in test administration is needed and computer or manual skills are required to do it perfectly.

2= No experience in test administration is required, or the administration takes less than 5 minutes to learn. Usually computer administration is that simple.

Range of use

0= Only the highest-functioning patient groups can do this test fairly easily, because of its duration, its complexity and its tendency to frustrate patients.

1= Only suited for patients who have enough visual or motor capabilities.

2= Suited for most patient groups, even for the visually impaired or severely intellectually impaired, and for children above the age of 8.


DISCLAIMER

I will not take any responsibility for how the information on this website will affect you. It always remains your responsibility to handle all information with care and in case of medical or mental problems you should ALWAYS consult a professional in your neighbourhood!
